Case Study: Evading Playwright Detection on G2

Many sites employ sophisticated anti-scraping mechanisms to detect and block automated scripts. G2, a popular platform for software reviews, uses several such measures to prevent automated access to its product category pages.

In this case study, we scrape the G2 categories page with Playwright and compare the results of a bare minimum script with those of a fortified version.

Our goal is to scrape the product information without getting blocked.

Step 1: Standard Playwright Setup for Scraping G2

In our first approach, we use a basic Playwright script to navigate to the G2 categories page and capture a screenshot:

    const playwright = require("playwright");

    (async () => {
      const browser = await playwright.firefox.launch({ headless: false });
      const context = await browser.newContext();
      const page = await context.newPage();
      await page.goto("https://www.g2.com/categories");
      await page.screenshot({ path: "product-page.png" });
      await browser.close();
    })();

- It launches a new Firefox browser, opens a new page, and navigates to the G2 categories page.

- In this standard setup, no specific configuration is made to evade detection.

- This means that the website is likely to detect the script as automated.

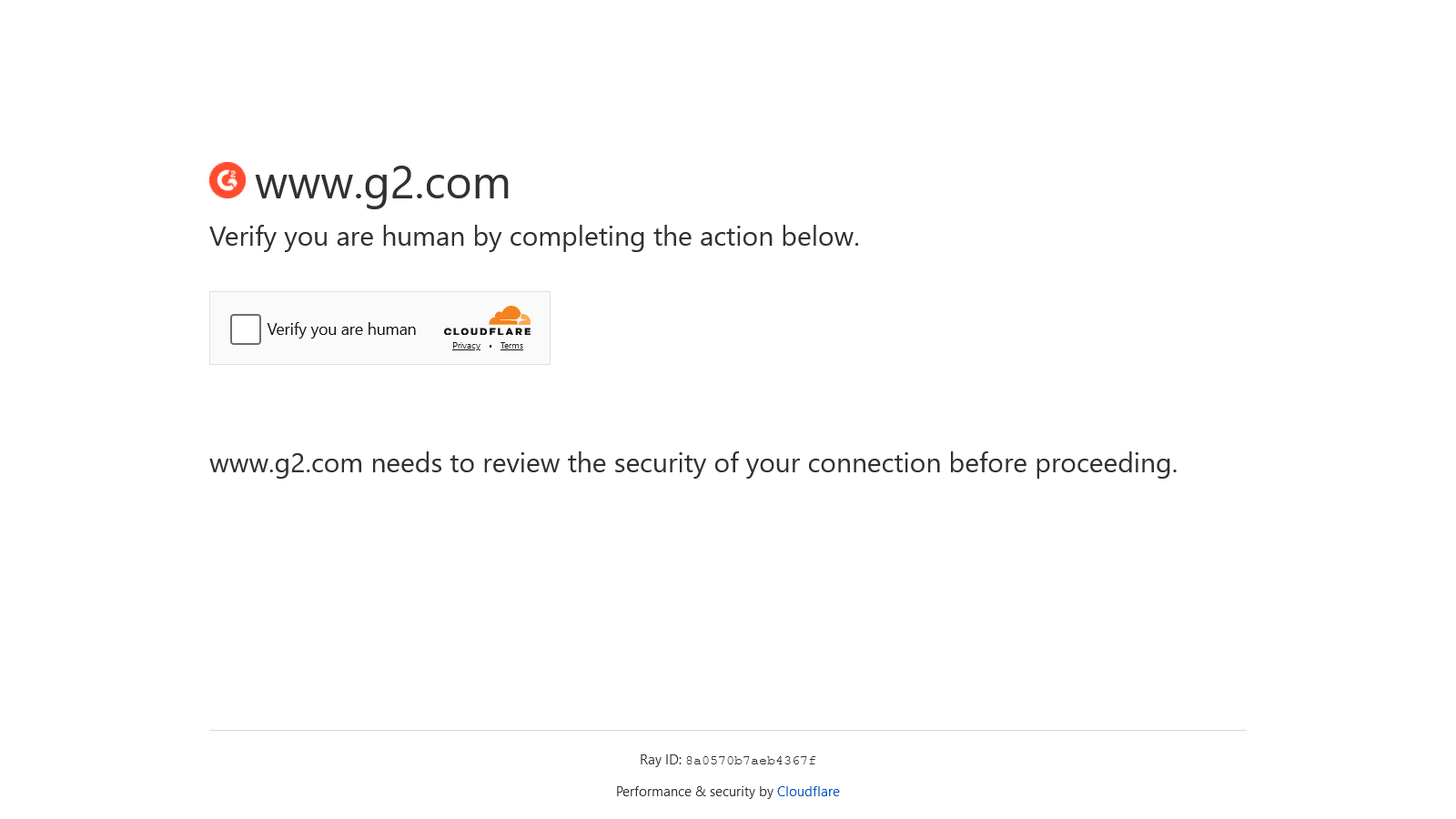

This is the output:

This script failed to retrieve the desired information. The page encountered CAPTCHA challenges. This result highlighted the limitations of a bare minimum approach, which does not mimic real user behavior.
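A screenshot has to be inspected by hand, so it can help to flag a blocked run in code as well. The following is a minimal sketch of one way to do that. The function name `looksLikeChallenge` and the marker phrases are assumptions made for illustration; they are not taken from G2's actual challenge page and would need to be adjusted after looking at the real response.

```javascript
// Hypothetical marker phrases: assumptions for illustration,
// not G2's actual challenge markup.
const CHALLENGE_MARKERS = ["captcha", "verify you are human", "access denied"];

// Returns true when the page title or visible text contains any marker phrase.
function looksLikeChallenge(title, bodyText) {
  const haystack = `${title}\n${bodyText}`.toLowerCase();
  return CHALLENGE_MARKERS.some((marker) => haystack.includes(marker));
}

// Possible use after page.goto(...) in a Playwright script:
//   const blocked = looksLikeChallenge(
//     await page.title(),
//     await page.innerText("body")
//   );
//   if (blocked) console.warn("Challenge page detected.");
```

A text heuristic like this can produce false positives on pages that merely mention CAPTCHAs, so it is best treated as a quick signal rather than a reliable detector.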

Step 2: Fortified Playwright Script

To overcome these challenges, we fortified the script by customizing the browser context and setting a session cookie. These modifications aim to make the automated session look more like a real user's.

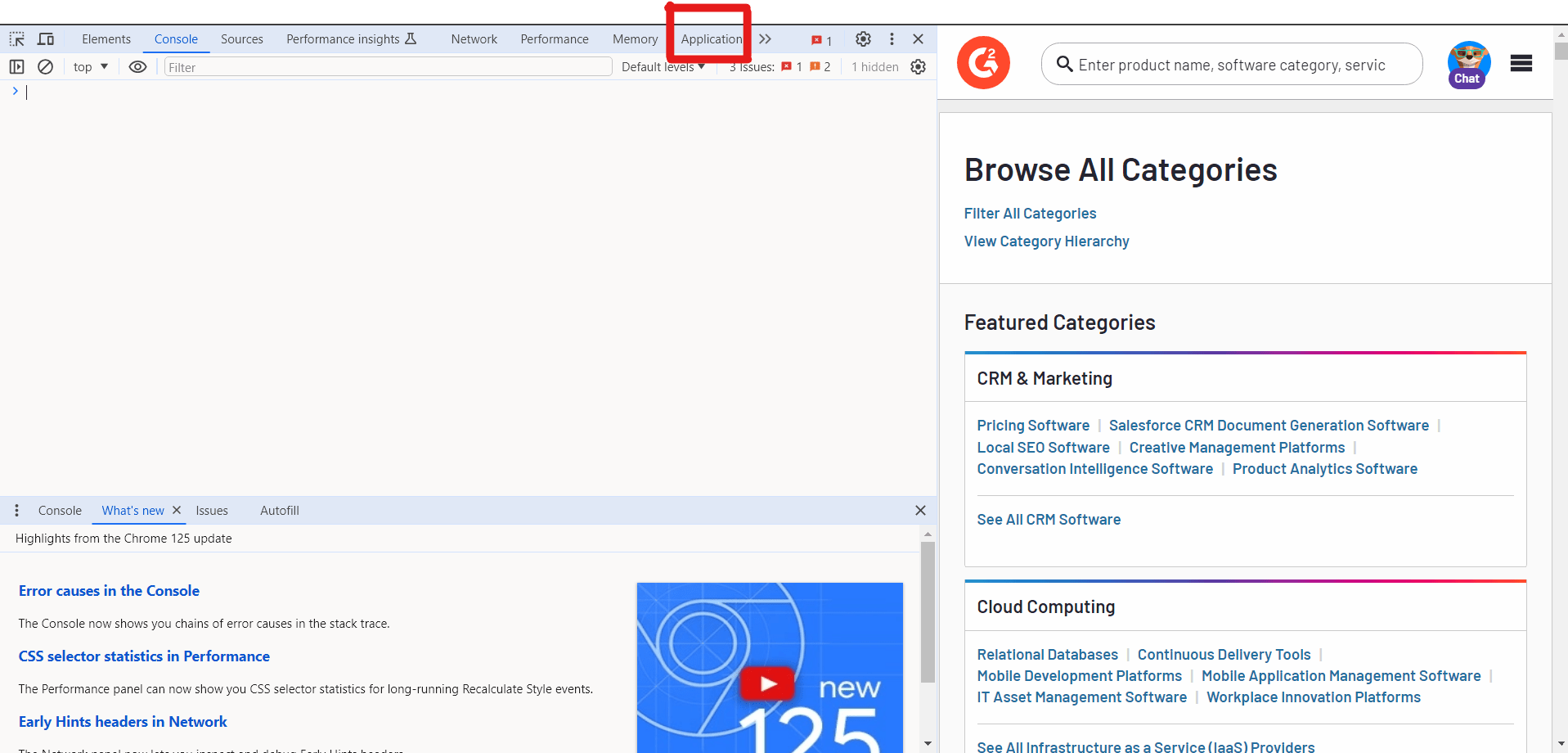

Finding Session Cookies 1. Open Browser Developer Tools: Navigate to the G2 categories page in a real browser (e.g., Chrome or Firefox).

-

Access Developer Tools: Right-click on the page and select "Inspect" or press to open the Developer Tools.Control + Shift + I

-

Navigate to Application/Storage: In Developer Tools, go to the "Application" tab in Chrome or "Storage" tab in Firefox.

-

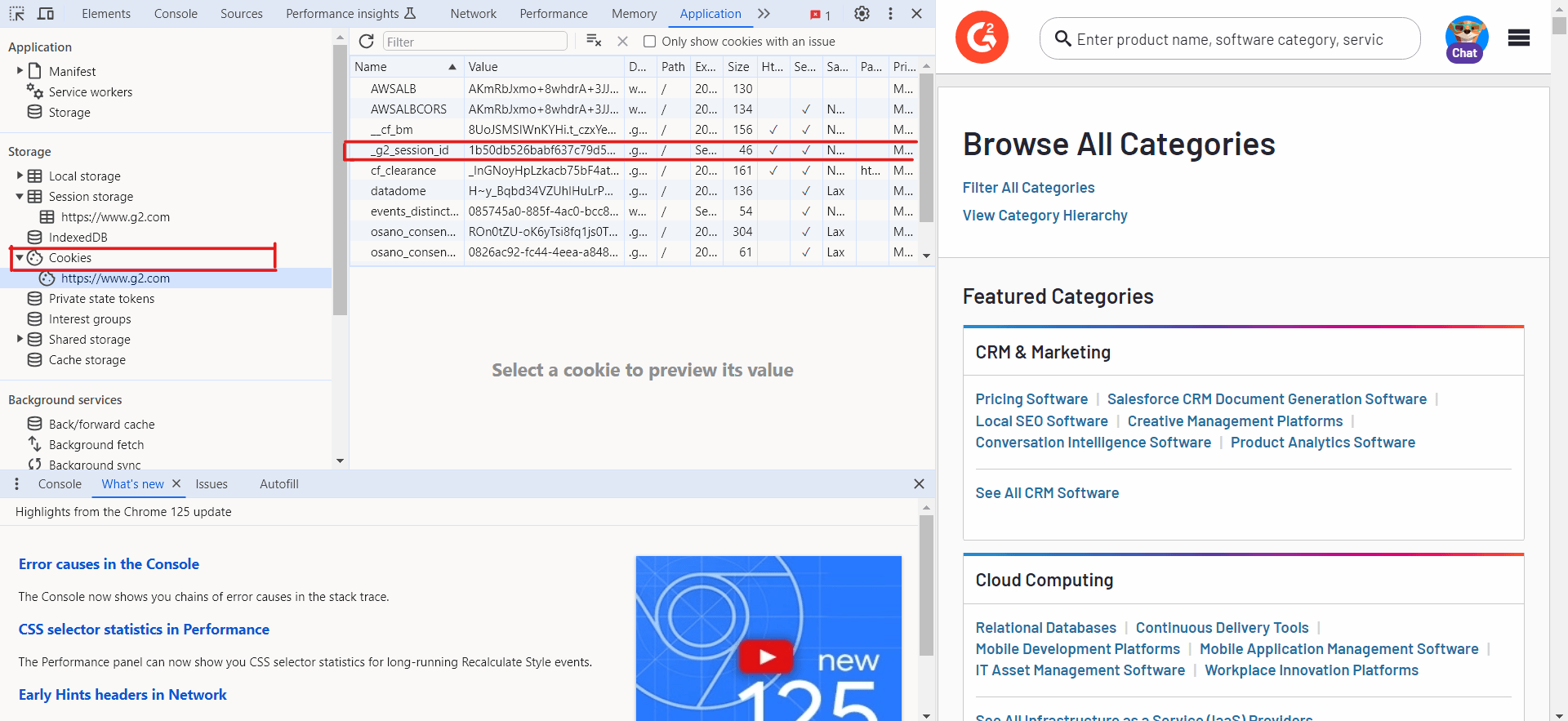

Locate Cookies: Under "Cookies," select the entry. Here, you will see a list of cookies set by the site.https://www.g2.com

-

Copy Session Cookie: Find the cookie named . Copy its value, as this will be used in your Playwright script._g2_session_id
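If you copy more than one cookie, or prefer not to hardcode the value in the script, a small helper can turn a copied `name=value; name=value` string into the objects that Playwright's `context.addCookies` accepts. This is only a sketch: `parseCookieHeader` and the `G2_COOKIES` environment variable are hypothetical names introduced here, and the default domain simply mirrors the one used in this case study.

```javascript
// Converts a "name=value; other=value" string into Playwright cookie objects.
// The default domain is an assumption that matches this case study.
function parseCookieHeader(header, domain = "www.g2.com") {
  return header
    .split(";")
    .map((pair) => pair.trim())
    // Keep only pairs that have a non-empty name before the first "=".
    .filter((pair) => pair.indexOf("=") > 0)
    .map((pair) => {
      const index = pair.indexOf("="); // split on the first "=" only
      return {
        name: pair.slice(0, index).trim(),
        value: pair.slice(index + 1).trim(),
        domain,
        path: "/",
      };
    });
}

// Possible use (G2_COOKIES is a hypothetical environment variable):
//   await context.addCookies(parseCookieHeader(process.env.G2_COOKIES ?? ""));
```

Session cookies expire, so a value copied from the browser will eventually stop working and has to be refreshed by repeating the steps above.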

Here's the fortified Playwright script that includes the browser context modification and session cookies:

    const playwright = require("playwright");

    (async () => {
      const browser = await playwright.firefox.launch({ headless: false });
      const context = await browser.newContext({
        userAgent:
          "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:127.0) Gecko/20100101 Firefox/127.0",
        viewport: { width: 1280, height: 800 },
        locale: "en-US",
        geolocation: { longitude: 12.4924, latitude: 41.8902 },
        permissions: ["geolocation"],
        extraHTTPHeaders: {
          "Accept-Language": "en-US,en;q=0.9",
          "Accept-Encoding": "gzip, deflate, br",
          Referer: "https://www.g2.com/",
        },
      });

      // Set the session ID cookie
      await context.addCookies([
        {
          name: "_g2_session_id",
          value: "0b484c21dba17c9e2fff8a4da0bac12d",
          domain: "www.g2.com",
          path: "/",
        },
      ]);

      const page = await context.newPage();
      await page.goto("https://www.g2.com/categories");
      await page.screenshot({ path: "product-page.png" });
      await browser.close();
    })();

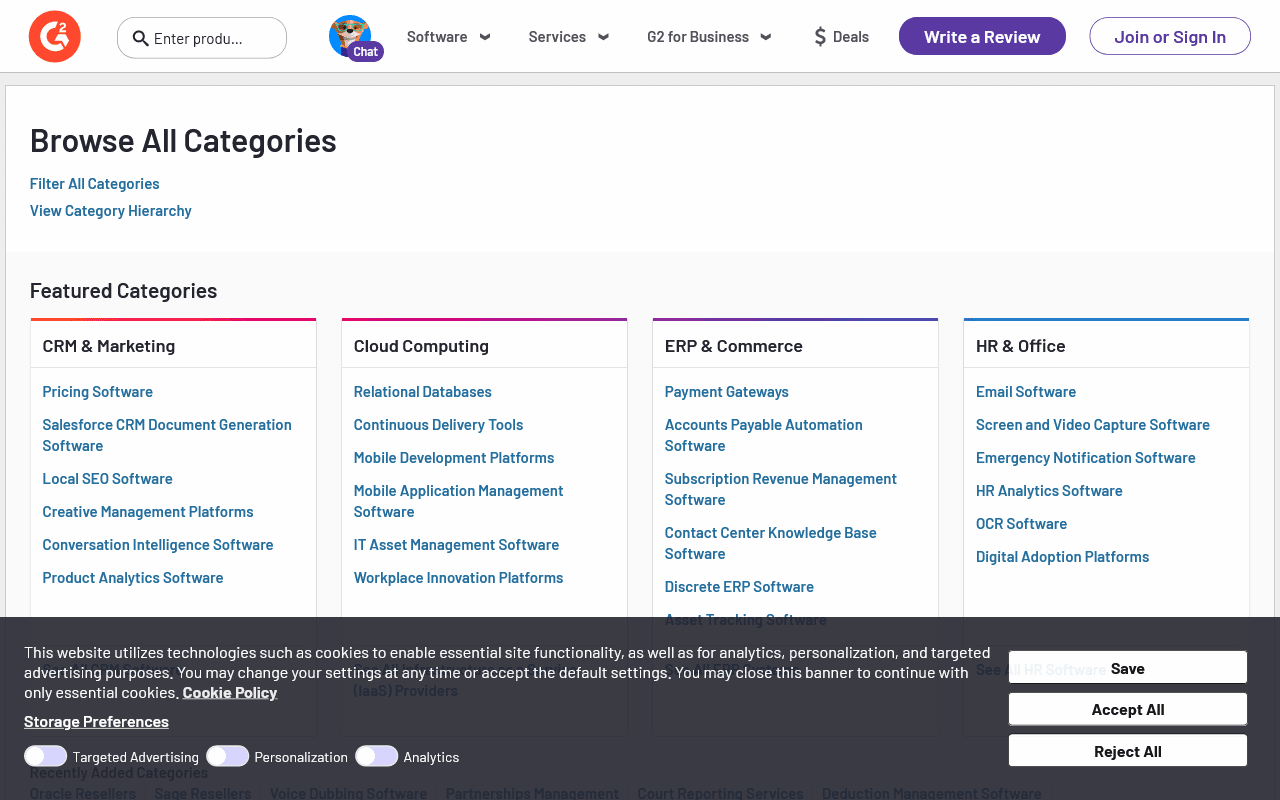

Outcome

With the fortified script, the page loaded correctly, and we bypassed the initial bot detection. The custom user agent and the session cookie helped simulate a real user session, which was crucial for avoiding detection and reaching the data we wanted to scrape.
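Because the context settings have to agree with one another to look plausible (for example, the locale and the `Accept-Language` header), it may be convenient to derive them from a single place. The sketch below shows one possible way to do so. The helper names `acceptLanguageFor` and `buildContextOptions` are hypothetical, and whether G2 actually checks this kind of consistency is an assumption, not something this case study verified.

```javascript
// Derives an Accept-Language value from a locale such as "en-US".
// A locale without a region (e.g. "fr") is returned unchanged.
function acceptLanguageFor(locale) {
  const base = locale.split("-")[0];
  return base === locale ? locale : `${locale},${base};q=0.9`;
}

// Builds an options object for browser.newContext() so that the locale
// and the Accept-Language header are always derived from the same value.
function buildContextOptions({ userAgent, locale = "en-US" }) {
  return {
    userAgent,
    locale,
    viewport: { width: 1280, height: 800 },
    extraHTTPHeaders: { "Accept-Language": acceptLanguageFor(locale) },
  };
}

// Possible use in a Playwright script:
//   const context = await browser.newContext(
//     buildContextOptions({ userAgent: "<your user agent string>" })
//   );
```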

Comparison

- Bare Minimum Script: Failed to bypass bot detection, leading to incomplete page loads and CAPTCHA challenges.

- Fortified Script: Successfully mimicked a real user, allowing us to load the page and scrape data without interruptions.