Strategies To Make Playwright Undetectable

You can use one or more of the following strategies to make your Playwright scraper undetectable. Each strategy can be tailored to enhance your scraping effectiveness while minimizing detection risks.

1. Use Playwright Extra With Residential Proxies

Playwright Extra is a library that helps disguise Playwright scripts to make them appear as human-like as possible. It modifies browser behavior to evade detection techniques like fingerprinting and headless browser detection.

The stealth plugin it relies on, originally developed for Puppeteer, is known as `puppeteer-extra-plugin-stealth` and is designed to enhance browser automation by minimizing detection. Below, we will see it in action with an example demonstrating how to use this plugin.

- We will start by installing the required libraries:

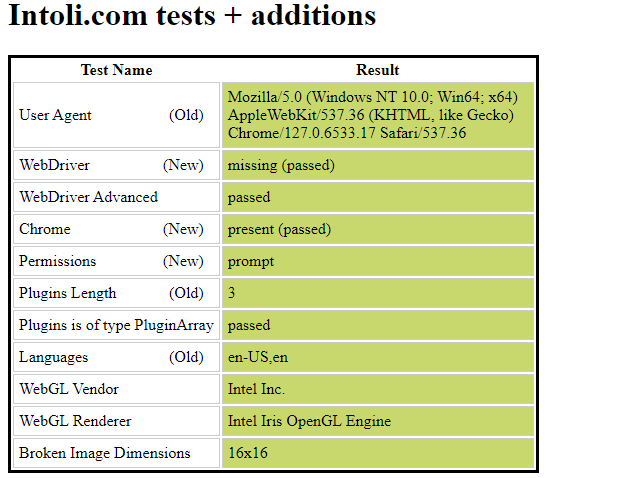

- We will navigate to bot.sannysoft.com, a website designed to test and analyze how well your browser or automation setup can evade detection by anti-bot systems.

We will also add a residential proxy to enhance our setup's stealth capabilities further. This comprehensive approach will help us ensure that our automated interactions closely mimic real user behavior, reducing the likelihood of detection.

    const { chromium } = require("playwright-extra");
    const stealth = require("puppeteer-extra-plugin-stealth")();

    // Register the stealth plugin before launching the browser
    chromium.use(stealth);

    // Replace with your residential proxy address and port
    chromium
      .launch({
        headless: true,
        args: ["--proxy-server=http://your-residential-proxy-address:port"],
      })
      .then(async (browser) => {
        const page = await browser.newPage();

        console.log("Testing the stealth plugin..");
        await page.goto("https://bot.sannysoft.com", { waitUntil: "networkidle" });
        await page.screenshot({ path: "stealth.png", fullPage: true });

        console.log("All done, check the screenshot.");
        await browser.close();
      });

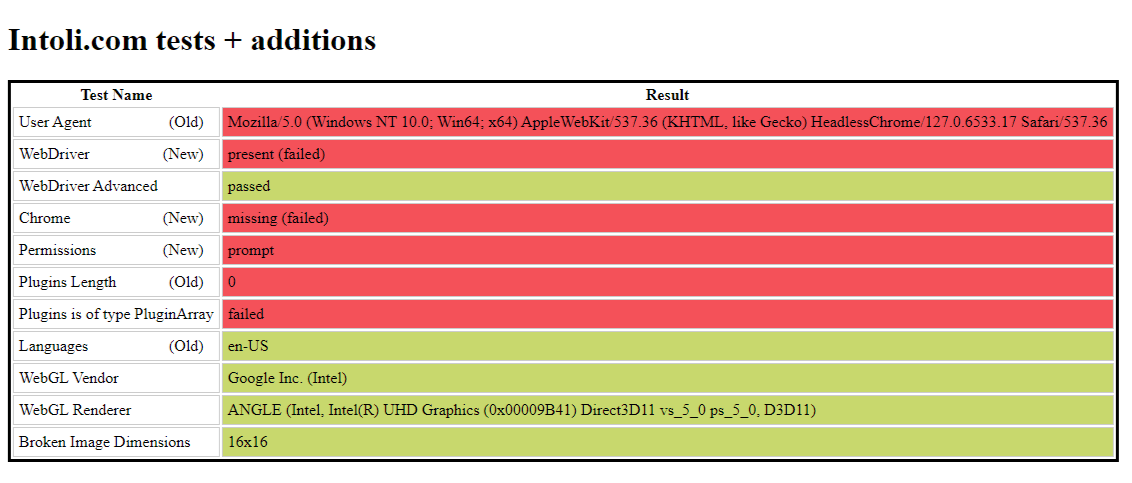

In contrast, a Playwright script without any evasion mechanisms fails several tests presented by the website.

The comparison highlights the effectiveness of using the stealth plugin and residential proxy for evading detection.

Residential and mobile proxies are typically more expensive than data center proxies because they use IP addresses from real residential users or mobile devices. Proxy providers usually charge for the bandwidth used, making it crucial to optimize resource usage.
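One way to act on that is to stop the browser from downloading heavy assets that the scraper does not need. The following is a minimal sketch rather than part of the original script: it uses Playwright's `page.route` to abort requests by resource type. The helper names (`shouldBlock`, `installBandwidthSaver`) and the list of blocked types are assumptions chosen for illustration, so adjust them to what your target pages actually require.

```javascript
// Resource types assumed safe to skip for data extraction (illustrative list).
const BLOCKED_TYPES = new Set(["image", "media", "font"]);

// Pure helper: decide whether a request of the given type should be dropped.
function shouldBlock(resourceType) {
  return BLOCKED_TYPES.has(resourceType);
}

// Registers a catch-all route on a Playwright page that aborts blocked
// resource types and lets every other request continue unchanged.
function installBandwidthSaver(page) {
  return page.route("**/*", (route) => {
    const type = route.request().resourceType();
    return shouldBlock(type) ? route.abort() : route.continue();
  });
}
```

In a script like the one above, you would call `await installBandwidthSaver(page)` right after `browser.newPage()` and before `page.goto(...)`. Keep in mind that some anti-bot checks may expect images or fonts to load, so it is worth comparing results with and without the filter.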

2. Use Hosted Fortified Version of Playwright

Hosted and fortified versions of Playwright are optimized for scraping and include built-in anti-bot bypass mechanisms, including rotating residential proxies.

Below, we will explore how to integrate Playwright with Brightdata, a leading provider of hosted web scraping solutions and proxies.

    const playwright = require("playwright");

    // Here we will input the Username and Password we get from Brightdata
    const { AUTH = "USER:PASS", TARGET_URL = "https://bot.sannysoft.com" } =
      process.env;

    async function scrape(url = TARGET_URL) {
      // Fail early if the placeholder credentials were not replaced
      if (AUTH === "USER:PASS") {
        throw new Error(
          `Provide Scraping Browsers credentials in AUTH` +
            ` environment variable or update the script.`
        );
      }

      // This is our Brightdata Proxy URL endpoint that we can use
      const endpointURL = `wss://${AUTH}@brd.superproxy.io:9222`;

      /**
       * This is where the magic happens. Here, we connect to a remote browser instance fortified by Brightdata.
       * They utilize all the methods we talked about and more to make the Chromium instance evade detection effectively.
       */
      const browser = await playwright.chromium.connectOverCDP(endpointURL);

      // Now that we have a fortified Chromium instance, we can navigate to our desired URL.
      try {
        console.log(`Connected! Navigating to ${url}...`);
        const page = await browser.newPage();
        await page.goto(url, { timeout: 2 * 60 * 1000 });

        console.log(`Navigated! Scraping page content...`);
        const data = await page.content();
        console.log(`Scraped! Data: ${data}`);
      } finally {
        // Always release the remote browser, even if navigation fails
        await browser.close();
      }
    }

    if (require.main === module) {
      scrape().catch((error) => {
        console.error(error.stack || error.message || error);
        process.exit(1);
      });
    }

- The script starts by checking if the necessary authentication details for the Brightdata proxy service are provided. If not, it throws an error.

- Next, it connects to a Chromium browser instance through the Brightdata proxy using Playwright’s connectOverCDP method.

- Brightdata fortifies this connection, which means it’s configured to evade common bot detection techniques.

- Once connected, the script navigates to the target URL and retrieves the page content.
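The credential check and endpoint construction described in these steps can be isolated into a small helper, which makes them easier to test without opening a connection. This is only a sketch: `buildEndpoint` is a hypothetical name, the host and port are copied from the script above, and the extra check on the `USER:PASS` shape is an assumption of this sketch, not something the original script does.

```javascript
// Hypothetical helper: validates "USER:PASS" style credentials and builds the
// WebSocket endpoint that the script above passes to connectOverCDP.
function buildEndpoint(auth, host = "brd.superproxy.io:9222") {
  const sep = typeof auth === "string" ? auth.indexOf(":") : -1;
  const malformed = sep <= 0 || sep === auth.length - 1;
  if (malformed || auth === "USER:PASS") {
    throw new Error("Provide real credentials in the form USER:PASS");
  }
  return `wss://${auth}@${host}`;
}
```

With this in place, the script could call `playwright.chromium.connectOverCDP(buildEndpoint(process.env.AUTH))` and fail with a clear message before any network traffic is sent.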

Even though this is a great solution, it is very expensive to implement on a large scale.

3. Fortify Playwright Yourself

Fortifying Playwright involves making your automated browser behavior appear more like a real user and less like a bot.

By implementing different techniques, you can make your Playwright scripts more robust and stealthy, reducing the likelihood of being detected as a bot by websites.
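One such technique is to avoid perfectly regular timing between actions. The sketch below is an illustration under stated assumptions, not a complete evasion strategy: `humanDelay` and `moveAndPause` are hypothetical helper names, and the delay range and step count are arbitrary values. It relies only on Playwright's `page.mouse.move` and `page.waitForTimeout`.

```javascript
// Returns a random integer delay in the inclusive range [minMs, maxMs].
// rng is injectable so the function can be tested deterministically.
function humanDelay(minMs, maxMs, rng = Math.random) {
  return Math.floor(minMs + rng() * (maxMs - minMs + 1));
}

// Moves the mouse to (x, y) in several small steps, then pauses for a
// random interval before the next action. `page` is a Playwright Page.
async function moveAndPause(page, x, y) {
  await page.mouse.move(x, y, { steps: 25 });
  await page.waitForTimeout(humanDelay(300, 1200));
}
```

Calling `await moveAndPause(page, 400, 300)` between navigation and clicks adds some variation to the interaction pattern. How much this helps depends on the site, so treat it as one ingredient to experiment with.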

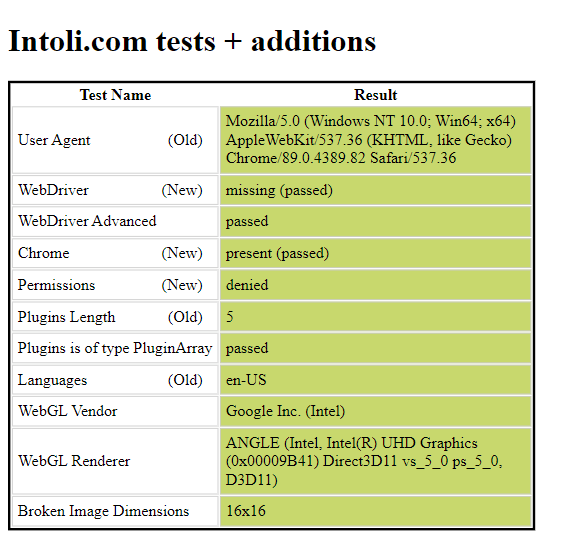

Let us visit bot.sannysoft with a Playwright script that we fortified:

    const { chromium } = require("playwright");

    (async () => {
      const browser = await chromium.launch({
        // Set arguments to mimic real browser
        args: [
          "--disable-blink-features=AutomationControlled",
          "--disable-extensions",
          "--disable-infobars",
          "--enable-automation",
          "--no-first-run",
          "--enable-webgl",
        ],
        headless: false,
      });

      const context = await browser.newContext({
        // Emulate user behavior by setting up userAgent and viewport
        userAgent:
          "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.82 Safari/537.36",
        viewport: { width: 1280, height: 720 },
        geolocation: { longitude: 12.4924, latitude: 41.8902 }, // Example: Rome, Italy
        permissions: ["geolocation"],
        // ignoreHTTPSErrors is a context option in Playwright, not a launch option
        ignoreHTTPSErrors: true,
      });

      const page = await context.newPage();
      await page.goto("https://bot.sannysoft.com");
      await page.screenshot({ path: "screenshot.png" });

      await browser.close();
    })();

| Flag | Description |
|---|---|
| `--disable-blink-features=AutomationControlled` | This flag prevents the `navigator.webdriver` JavaScript property from being true. Some websites use this property to detect if the browser is being controlled by automation tools like Puppeteer or Playwright. |
| `--disable-extensions` | This flag disables all Chrome extensions. Extensions can interfere with the behavior of the browser and the webpage, so it’s often best to disable them when automating browser tasks. |
| `--disable-infobars` | This flag disables infobars on the top of the browser window, such as the "Chrome is being controlled by automated test software" infobar. |
| `--enable-automation` | This flag enables automation-related APIs in Chrome. |
| `--no-first-run` | This flag skips the first-run experience in Chrome, which is a series of setup steps shown the first time Chrome is launched. |
| `--enable-webgl` | This flag enables WebGL in a browser. |

We can now run our fortified Playwright script and inspect the resulting screenshot.

As demonstrated, we got the same result as we did with the stealth plugin. The configuration of our Playwright script can be tailored based on the specific website and the degree of its anti-bot defenses.

We can also enhance our applications by adding rotating residential proxies to make it harder for bot detectors to spot us.
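A simple way to rotate proxies is to cycle through a pool and hand a different entry to each browser launch. The following is a minimal round-robin sketch: `createProxyRotator` is a hypothetical helper, and the pool entries are placeholders you would replace with endpoints from your provider. Each entry follows the shape of Playwright's `proxy` launch option (`server`, plus optional `username` and `password`).

```javascript
// Hypothetical helper: returns a function that yields proxies from the pool
// in round-robin order, wrapping back to the start when the pool is exhausted.
function createProxyRotator(proxies) {
  if (!Array.isArray(proxies) || proxies.length === 0) {
    throw new Error("Proxy pool must be a non-empty array");
  }
  let index = 0;
  return function nextProxy() {
    const proxy = proxies[index];
    index = (index + 1) % proxies.length;
    // Return a copy so callers cannot mutate the pool by accident.
    return { ...proxy };
  };
}
```

For example, after `const nextProxy = createProxyRotator(pool)`, each run could start with `chromium.launch({ proxy: nextProxy() })` so that consecutive sessions leave through different IP addresses.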

By experimenting with various strategies tailored to our unique requirements, we can achieve an optimal setup robust enough to bypass the protective measures of a website we want to scrape.

4. Leverage ScrapeOps Proxy to Bypass Anti-Bots

Depending on your use case, leveraging a proxy solution with built-in anti-bot bypasses can be a simpler and more cost-effective approach compared to manually optimizing your Playwright scrapers. This way, you can focus on extracting the data you need without worrying about making your bot undetectable.

ScrapeOps Proxy Aggregator is another proxy solution that makes Playwright undetectable to bot detectors, even complex ones like Cloudflare.

- Sign Up for ScrapeOps Proxy Aggregator: Start by signing up for ScrapeOps Proxy Aggregator and obtain your API key.

- Installation and Configuration: Install Playwright and set up your scraping environment.

- Initialize Playwright with ScrapeOps Proxy: Set up Playwright to use ScrapeOps Proxy Aggregator by providing the proxy URL and authentication credentials.

Below, we will see how to use Playwright with ScrapeOps to get the ultimate web scraping application.

    const { chromium } = require("playwright");

    // Your ScrapeOps API key
    const API_KEY = "Your API KEY";

    // Function to convert regular URLs to ScrapeOps URLs
    function getScrapeOpsUrl(url) {
      const payload = { api_key: API_KEY, url: url, bypass: "cloudflare_level_1" };
      const queryString = new URLSearchParams(payload).toString();
      const proxy_url = `https://proxy.scrapeops.io/v1/?${queryString}`;
      return proxy_url;
    }

    // Main function to scrape the data
    async function main() {
      const browser = await chromium.launch({ headless: false });
      const page = await browser.newPage();

      await page.goto(getScrapeOpsUrl("https://bot.sannysoft.com"));
      await page.screenshot({ path: "screenshot.png" });

      await browser.close();
    }

    main();

ScrapeOps offers a variety of bypass levels to navigate through different anti-bot systems.

These bypasses range from generic ones, designed to handle a wide spectrum of anti-bot systems, to more specific ones tailored for platforms like Cloudflare and Datadome.

Each bypass level is crafted to tackle a certain complexity of anti-bot mechanisms, making it possible to scrape data from websites with varying degrees of bot protection.

| Bypass Level | Description | Typical Use Case |
|---|---|---|
| generic_level_1 | Simple anti-bot bypass | Low-level bot protections |
| generic_level_2 | Advanced configuration for common bot detections | Medium-level bot protections |
| generic_level_3 | High complexity bypass for tough detections | High-level bot protections |
| cloudflare | Specific for Cloudflare protected sites | Sites behind Cloudflare |
| incapsula | Targets Incapsula protected sites | Sites using Incapsula for security |
| perimeterx | Bypasses PerimeterX protections | Sites with PerimeterX security |
| datadome | Designed for DataDome protected sites | Sites using DataDome |
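To switch between bypass levels without editing the URL helper each time, the level can be passed in as a parameter. This is a sketch under assumptions: `buildScrapeOpsUrl` is a hypothetical variant of the `getScrapeOpsUrl` function shown earlier, it reuses the same endpoint and query parameter names from that script, and it does not check that the bypass value is one ScrapeOps accepts.

```javascript
// Hypothetical variant of getScrapeOpsUrl: the API key and bypass level are
// parameters, and the bypass parameter is omitted when no level is given.
function buildScrapeOpsUrl(targetUrl, apiKey, bypass) {
  const params = new URLSearchParams({ api_key: apiKey, url: targetUrl });
  if (bypass) {
    params.set("bypass", bypass);
  }
  return `https://proxy.scrapeops.io/v1/?${params.toString()}`;
}
```

A call such as `page.goto(buildScrapeOpsUrl("https://bot.sannysoft.com", API_KEY, "generic_level_1"))` would then let you start with a simpler level and move to a more specific one only when a site requires it.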

With ScrapeOps, you don’t have to worry about maintaining your script’s anti-bot bypassing techniques, as it handles these complexities for you.